A “real” man, you say?

A couple of months ago, my Instagram feed started showing me strange AI adverts for military workouts. Possibly because I’d been researching calisthenic exercises, or maybe it was the ab-crunchie advert I made for January’s zine. Either way, more and more started pouring in.

I couldn’t stop watching. Not because I was persuaded, but because I was mesmerised. These “people” look almost human, but they live deep in the uncanny valley, and I find it hard to take my eyes off them. Shirtless men on fake podcasts, fake stages, fake vox pops. It’s bizarre, and dare I say, oddly magnetic. I stitched together a few videos from my ever-growing collection so you can see what I mean.

Have you seen anything similar to these…?

Can You Spot an AI Advert?

It seems like an easy question. The signs are usually there if you’re looking closely. But most of us aren’t. When I’ve shown friends my screengrabs, some were surprised to learn they were AI at all.

The settings feel familiar, so we might gloss over them without taking a second look. When present, even the disclaimers (“AI-generated characters for entertainment purposes”) are easy to miss. And they’re odd disclaimers, because the ads depend on us believing what we see and hear is real. The character embodies the results we’ll get if we follow their advice.

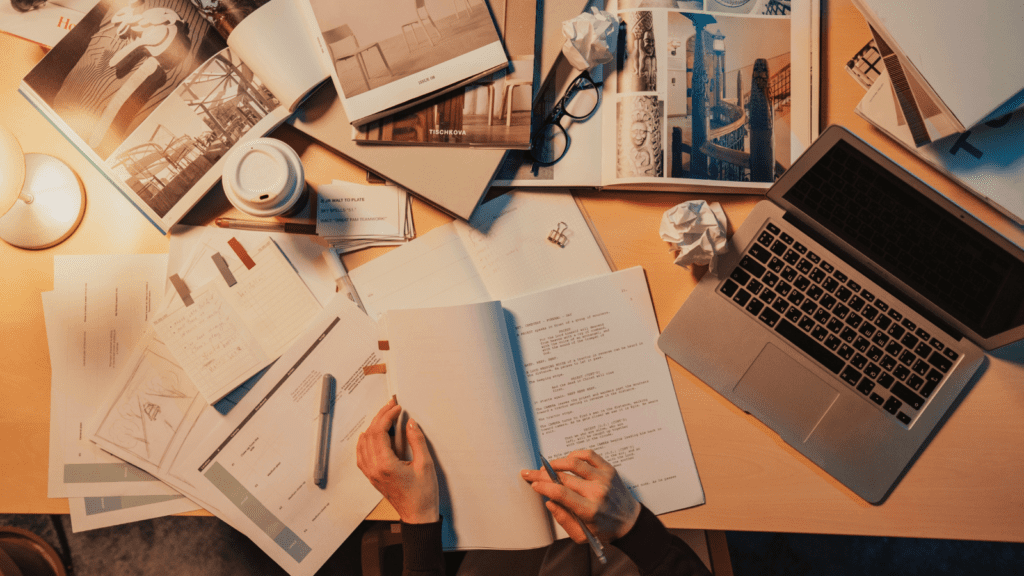

Of course, the only way to look like these “real men” is to generate an AI version of yourself in Google Veo. I just used Photoshop for this one (sorry)…

This is why I care about things like this. It is not simply the technology itself that can be troubling. It is the way it is used to manipulate trust and identity. These ads are designed to exploit belief. One byproduct is an erosion of trust that splits people into two camps: those who believe everything, and those who believe nothing. Both weaken the social bonds that rely on shared trust.

The Ecology of Artificial Intelligence

Jonathan Cook, in his 2023 piece The Ecology of Artificial Intelligence, points to an ecological metaphor: K-selected species (like whales) produce few offspring and nurture them deeply, while r-selected species (like dandelions) produce vast numbers and invest little in each.

AI advertising behaves like dandelions: scattershot, fast, cheap, disposable. Most “seeds” will not work, but some will catch. Absurdity can even be a strategy, whether deliberate or not, because it grabs attention and stirs strong emotional reactions.

When you live in a world thick with dandelion fluff, it is harder to hold onto who you are. The noise pushes you toward quick, shallow identity shortcuts, which are precisely the kind these ads exploit.

Identity Hooks and the Unfillable Hole

The “real man” hook in these ads is just one example. The same trick works for almost any identity role: “real activists”, “real mothers”, “real writers”, or anything that gnaws at your personal sense of belonging.

It works by pointing to a lack, that feeling you do not measure up, and selling you a fix. The problem is, the lack is unfillable. AI avatars deepen the trap. They are inhuman ideal selves, immune to hypocrisy. They never get caught breaking their own rules, unlike human influencers. That makes them perfect sales tools.

Choosing the World We Want to Make

So what is the alternative? Perhaps it is choosing to create whale-spaces for ourselves and one another, spaces that are slower, deeper, and richer.

That could look like making things with our hands, meeting in person, sharing stories, and looking each other in the eye to see the complexity there. Real humans. Accountable. Messy. Lacking.